Do competitive analysis faster with Askpot

Skip the manual busywork. Use Askpot to discover competitors, mine reviews, analyze websites, and synthesize findings into decision-ready reports in minutes, not weeks.

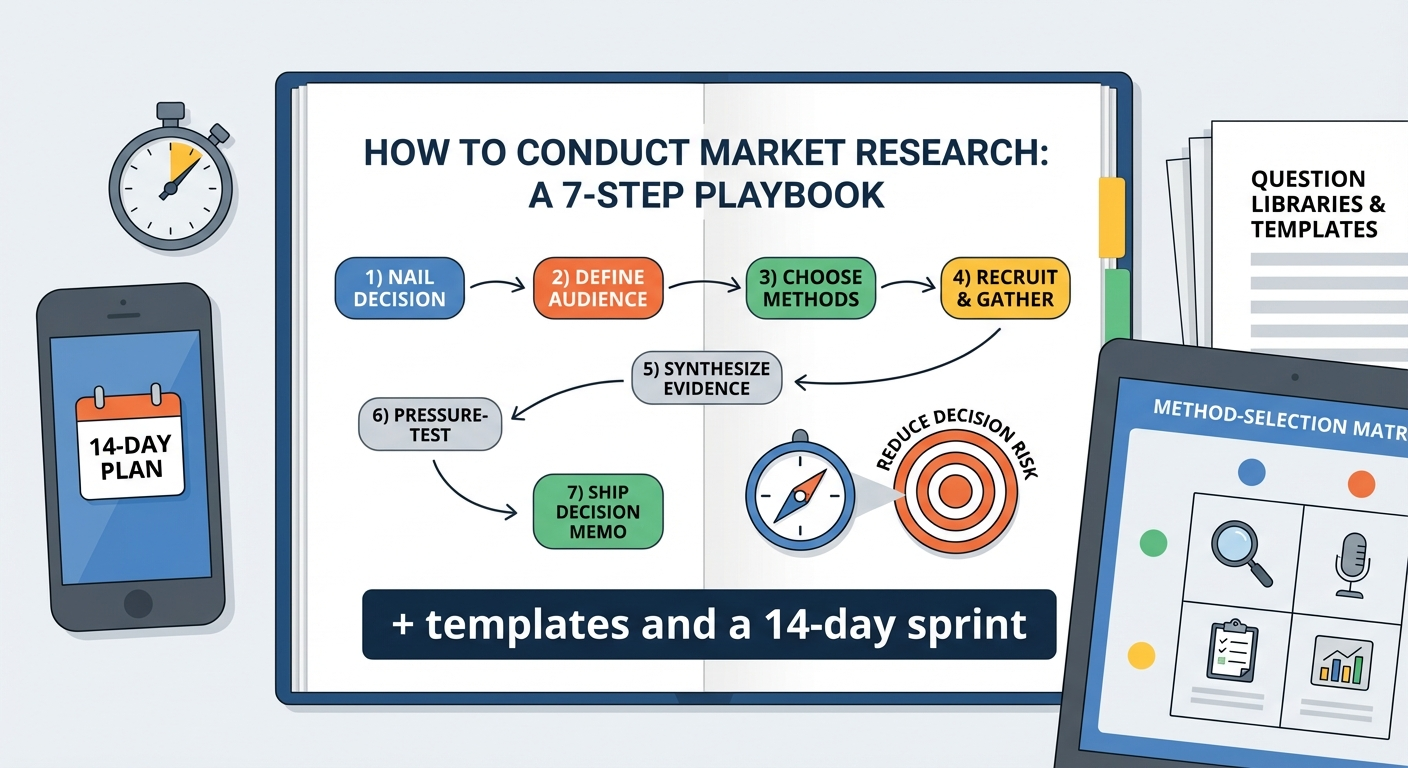

How to Conduct Market Research: A 7‑Step Playbook (+ templates and a 14‑day sprint)

If you’re busy and need the TL;DR: market research is only useful if it reduces decision risk. Start with a single decision, define what you must learn to make it with confidence, pick the minimum‑viable methods, then collect just enough evidence to act. Below you’ll find a practical, decision‑first process, a method‑selection matrix, a 14‑day plan, question libraries, and templates you can copy.

Quick answer (the 7 steps):

1. Nail the decision and hypotheses. Write one decision in plain language, attach a deadline and an owner, then translate it into falsifiable hypotheses. Define what would change your mind.

2. Define the audience and sampling frame. Specify who qualifies and how you’ll reach them. Estimate incidence and feasibility before you commit.

3. Choose methods (primary vs. secondary) with a clear success threshold. Select the fastest method that can move you from “uncertain” to “enough confidence,” and define pass/fail criteria up front.

4. Recruit participants and gather data fast. Use 2–3 channels in parallel. Pilot once, then scale.

5. Synthesize evidence into insights. Code and theme data; tie every insight to a source and a strength rating.

6. Pressure‑test recommendations and pricing. Try to break your own conclusion with counter‑evidence, rivals’ claims, and a quick live test.

7. Ship a one‑page decision memo and operational next steps. Decisions don’t count until they change the roadmap, copy, or price. Assign owners, dates, and success metrics.

Start with the decision, not the method

Before you “do research,” write a single decision question your team must answer within 2–4 weeks.

Examples:

- Should we enter Segment X this quarter?

- Which value proposition should headline our new landing page?

- What price tiering should we test for the Pro plan?

Turn that into falsifiable hypotheses. Example: “Ops managers at 50–250 FTE companies will pay $79/month for automated compliance reports if it saves 4+ hours/month.” Your research exists only to collect evidence that validates or refutes these statements.

Deliverable: a one‑page research brief

- Decision we must make by [date]

- Hypotheses (H1…H3)

- Audience (must-have traits)

- Evidence needed to decide (ranked)

- Constraints (budget/time/sample)

The one‑decision rule

- If you have two decisions, you have zero. Force‑rank decisions by business impact and reversibility, then pick the highest‑impact, easiest‑to‑reverse decision first.

- Write the decision like a headline with a date: “By March 15, choose price tiering for Pro.” Ambiguity is the enemy of speed.
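If you score candidate decisions, the force-rank reduces to a two-key sort. This is a minimal Python sketch, assuming hypothetical 1–5 scores for impact and reversibility (5 = easiest to reverse) and treating impact as the primary key; the `Decision` type, the scores, and the example headlines are illustrative, not a prescribed scoring model.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    headline: str       # e.g. "By March 15, choose price tiering for Pro"
    impact: int         # hypothetical 1-5 score for business impact
    reversibility: int  # hypothetical 1-5 score, 5 = easiest to reverse


def force_rank(decisions):
    """Highest impact first; among equal impact, easiest to reverse first."""
    return sorted(decisions, key=lambda d: (-d.impact, -d.reversibility))


candidates = [
    Decision("Enter Segment X this quarter", impact=5, reversibility=1),
    Decision("Choose price tiering for Pro", impact=5, reversibility=4),
    Decision("Pick landing-page headline", impact=3, reversibility=5),
]
ranked = force_rank(candidates)
print(ranked[0].headline)  # Choose price tiering for Pro
```

A weighted sum of the two scores is an equally reasonable choice if your team prefers to trade impact directly against reversibility.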

Hypothesis cookbook (copy/paste)

- Who: ICP descriptor + qualifiers (role, company size, geo, recency).

- What: behavior or willingness (buy, switch, pay, adopt).

- Why: core job-to-be-done or pain.

- Threshold: numeric trigger that means “we act.” Example: “US‑based controllers at SaaS firms (50–250 FTE) will switch from spreadsheets to our close checklist if it reduces month‑end close by ≥20%.”
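Keeping the four parts as separate fields makes the “we act” trigger explicit and checkable. A minimal Python sketch; the `Hypothesis` class, its field names, and the `we_act` helper are illustrative, and it assumes the threshold is a minimum (flip the comparison for metrics where lower is better).

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    who: str          # ICP descriptor + qualifiers
    what: str         # behavior or willingness
    why: str          # job-to-be-done or pain
    metric: str       # what you will measure
    threshold: float  # numeric trigger that means "we act"

    def statement(self) -> str:
        return (f"{self.who} will {self.what} if {self.why} "
                f"({self.metric} >= {self.threshold:g}).")

    def we_act(self, observed: float) -> bool:
        """True when the observed evidence clears the pre-set threshold."""
        return observed >= self.threshold


h1 = Hypothesis(
    who="US-based controllers at SaaS firms (50-250 FTE)",
    what="switch from spreadsheets to our close checklist",
    why="it reduces month-end close",
    metric="% reduction in close time",
    threshold=20,
)
print(h1.statement())
print(h1.we_act(24), h1.we_act(12))  # True False
```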

Success thresholds and decision yardsticks

- Define “go” before you gather data: e.g., “≥70% of qualified buyers rank Value Prop A #1,” or “Price acceptability window includes $59–$79 with ≥60% ‘expensive but acceptable.’”

- Confidence rubric:

- High: converging qual + quant + behavior; expected ROI of at least 3x the research cost.

- Medium: converging qual/quant but missing behavior; act with a small pilot.

- Low: conflicting or thin data; defer or redesign test.
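The “go” rule and the confidence rubric can both be written down as plain functions before fieldwork, which makes it harder to move the goalposts later. A minimal Python sketch under stated assumptions: each ranking is a list ordered from #1 down, each rubric input is a yes/no judgment that a given evidence type converges, and the ROI condition on the High tier is left out. All names and the sample data are hypothetical.

```python
def share_ranking_first(rankings, option):
    """Share of qualified respondents whose #1 choice is `option`."""
    return sum(r[0] == option for r in rankings) / len(rankings)


def go(share, threshold=0.70):
    """'Go' rule fixed before fieldwork, e.g. >=70% rank Value Prop A #1."""
    return share >= threshold


def confidence(qual, quant, behavior, conflicting):
    """One reading of the rubric: each flag means that evidence type converges."""
    if conflicting:
        return "Low"
    if qual and quant and behavior:
        return "High"
    if qual and quant:
        return "Medium"  # act with a small pilot
    return "Low"         # thin data: defer or redesign the test


rankings = [["A", "B"], ["A", "C"], ["B", "A"], ["A", "B"], ["A", "C"],
            ["C", "A"], ["A", "B"], ["A", "C"], ["A", "B"], ["B", "C"]]
s = share_ranking_first(rankings, "A")
print(s, go(s))                              # 0.7 True
print(confidence(True, True, False, False))  # Medium
```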

Stakeholder kickoff (30 minutes)

- Participants: decision owner, PMM/PM, Sales/Success lead, Finance (for pricing), Research lead.

- Agenda: confirm the decision, hypotheses, constraints, and what “done” looks like. Log assumptions worth testing later.

Audience and sampling, fast and scrappy

Define: who qualifies, who disqualifies, and how you’ll find them.

- B2B recruiting channels: customer lists, LinkedIn filters, communities/Slack groups, conference attendee lists, vendor review platforms.

- B2C recruiting channels: your email list, social DMs, subreddits, interest groups, intercept on site/app.

Screening tips:

- Verify role and recency (e.g., “Purchased/used a [category] tool in last 6 months?”).

- Screen out “professional survey takers” with simple trap questions.

- Incentives: $50–$150 for 30–45 min B2B sessions; $10–$40 for B2C, depending on complexity.

Incidence, feasibility, and sample math (fast heuristics)

- Incidence rate = qualified respondents / total respondents screened.

- Back‑of‑napkin planning: expected completes ≈ invitations × response rate × incidence.

- Margin‑of‑error rule‑of‑thumb for surveys on proportions:

- n≈100 → ±10%; n≈250 → ±6%; n≈400 → ±5% (at ~95% confidence). Good enough for directional pricing and messaging.
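These rules of thumb follow from the standard normal-approximation margin of error for a proportion, z * sqrt(p * (1 - p) / n), with z = 1.96 (about 95% confidence) and the worst case p = 0.5. A short Python sketch of the planning math; the function names and the example rates (20% response, 50% incidence) are assumptions for illustration.

```python
import math


def incidence_rate(qualified, screened):
    return qualified / screened


def expected_completes(invitations, response_rate, incidence):
    return invitations * response_rate * incidence


def invitations_needed(target_completes, response_rate, incidence):
    # Invert the planning formula and round up to whole invitations.
    return math.ceil(target_completes / (response_rate * incidence))


def margin_of_error(n, p=0.5, z=1.96):
    # Normal approximation for a proportion; p=0.5 is the worst case.
    return z * math.sqrt(p * (1 - p) / n)


def sample_size_for(moe, p=0.5, z=1.96):
    # Smallest n whose margin of error is at most `moe`.
    return math.ceil(z ** 2 * p * (1 - p) / moe ** 2)


for n in (100, 250, 400):
    print(n, f"±{margin_of_error(n):.1%}")  # ±9.8%, ±6.2%, ±4.9%
print(invitations_needed(150, 0.20, 0.50))  # 1500
print(sample_size_for(0.05))                 # 385
```

Inverting the formula gives the familiar n ≈ 385 for ±5%, which is why n≈400 is a comfortable target.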

Segmenting respondents vs. buyers

- Not every user is a buyer. Define both: the economic buyer, the technical evaluator, and the end user. Recruit at least some of each if the decision spans them.

Screener pitfalls to avoid

- Vague recency windows (“recently evaluated”); prefer time‑boxed wording (“in the last 90 days”).

- Leading “would you” questions; instead confirm past behavior (“Which of these tools did you trial in the last 6 months?”).

- Single‑channel recruiting; blend 2–3 channels to reduce bias.

Incentive logistics

- Pay promptly (within 48 hours) to keep show rates high.

- Offer choice (gift cards, charitable donation, PayPal). For employees/customers, check policy compliance.

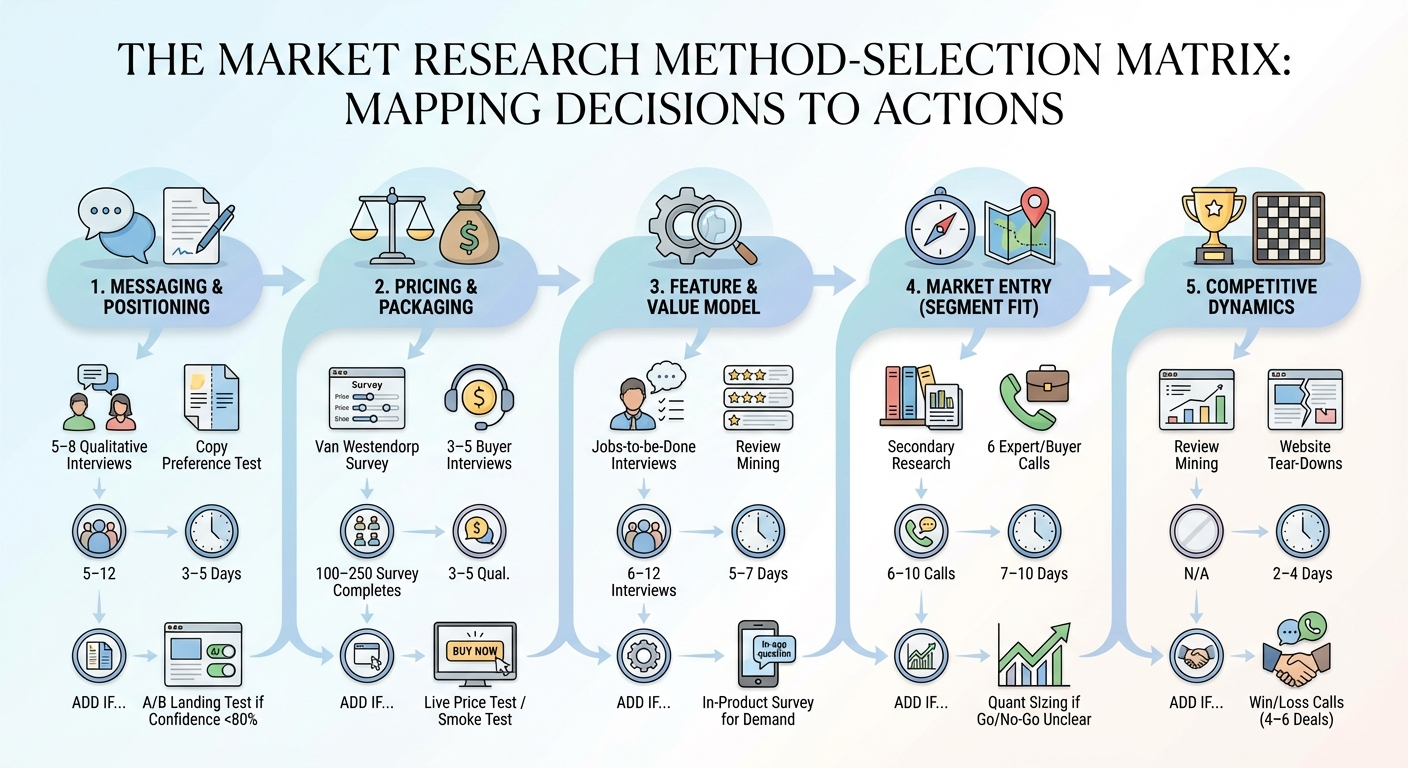

Method‑selection matrix

Notes:

- Secondary research (official datasets, trade and industry reports) frames markets and trends; primary research (interviews/surveys/behavioral data) reduces uncertainty about your specific users and offer. See the U.S. International Trade Administration’s stepwise approach to secondary data and country/industry analysis for market sizing and prioritization. Source: trade.gov (U.S. Department of Commerce).

How to read and use the matrix

- Start with the left column; pick the minimal method that can falsify your leading hypothesis.

- Set a “stop rule”: e.g., “If after 8 interviews themes still diverge, switch to a quick survey to arbitrate.”

Quality checks by method

- Interviews: confirm role, confirm recency, ask for artifacts (screenshots, invoices).

- Surveys: soft‑launch (n=25–50), remove straight‑liners, add time‑on‑page checks.

- Review mining: tag by segment/use case; avoid over‑weighting extreme reviews.
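A few of these checks are easy to automate on soft-launch exports. A minimal Python sketch, assuming each response row carries one grid question's ratings, total completion time in seconds (a stand-in for per-page timers), and an attention-check flag; the field names and the 40%-of-median speeding cutoff are assumptions to tune on your own data.

```python
from statistics import median


def flag_low_quality(responses, speed_cutoff=0.4):
    """Return ids of straight-liners, speeders, and attention-check failures.

    responses: dicts with 'id', 'grid' (ratings for one matrix question),
    'seconds' (completion time) and 'attention_ok' (bool).
    speed_cutoff: assumed fraction of the median time that counts as speeding.
    """
    med = median(r["seconds"] for r in responses)
    flagged = set()
    for r in responses:
        straight_lined = len(set(r["grid"])) == 1    # same answer to every item
        speeding = r["seconds"] < speed_cutoff * med  # implausibly fast
        if straight_lined or speeding or not r["attention_ok"]:
            flagged.add(r["id"])
    return flagged


soft_launch = [
    {"id": "r1", "grid": [4, 4, 4, 4], "seconds": 300, "attention_ok": True},
    {"id": "r2", "grid": [3, 4, 2, 5], "seconds": 60, "attention_ok": True},
    {"id": "r3", "grid": [2, 3, 3, 4], "seconds": 280, "attention_ok": True},
    {"id": "r4", "grid": [5, 4, 4, 3], "seconds": 320, "attention_ok": False},
    {"id": "r5", "grid": [4, 3, 5, 4], "seconds": 290, "attention_ok": True},
]
print(sorted(flag_low_quality(soft_launch)))  # ['r1', 'r2', 'r4']
```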

When to escalate methods

- If a decision carries high irreversibility (e.g., annual contracts), graduate from preference test → A/B → pilot in a low‑risk segment.

The 14‑day “good‑enough” research sprint

- Day 1: Decision brief + hypotheses + stakeholder kickoff.

- Day 2: Screeners, outreach, incentives set; compile secondary sources (industry/government, competitor sites).

- Days 3–5: 6–10 interviews (30–45 min). Parallel review mining of competitors and forums.

- Day 6: Build a 12–16 question survey (if needed). Soft‑launch for data quality.

- Days 7–8: Field survey to target completes; continue interviews if gaps remain.

- Day 9: Theming/coding of transcripts and reviews; build evidence table (quotes, metrics, links).

- Day 10: Pricing analysis (if applicable): Van Westendorp curves; identify the acceptable range (points of marginal cheapness and expensiveness) and the optimal price point.

- Day 11: Synthesize: 5–7 insight statements with strength ratings and implications.

- Day 12: Draft 1‑page decision memo; map to OKRs/experiments.

- Day 13: Stakeholder review; resolve disagreements with additional evidence/tasks.

- Day 14: Final decision and plan; book post‑launch validation.

Day‑by‑day pro tips

- Days 1–2: Pre‑write your interview guide and survey logic. Set calendar blocks for synthesis before fieldwork ends.

- Days 3–5: Record with consent. Use a note‑taking grid with columns: Trigger, Pain, Outcome, Quote, Implication.

- Days 6–8: Keep surveys under 5–7 minutes. Include one attention check and one open‑ended “why” question per priority.

- Day 9: Do a rapid affinity map. Cluster quotes, label themes, count mentions by segment.

- Day 10: Calculate Van Westendorp intersections and annotate guardrails (see “Field‑tested pricing in days”).

- Days 11–12: Each insight must include “So what” → recommendation → expected impact.

- Days 13–14: Run a pre‑mortem: “If this decision fails in 90 days, why?” Turn top risks into follow‑ups.

Question libraries you can copy

Interview (buyers; 30–45 min)

- Trigger: “Walk me through the last time you looked for [category]—what kicked it off?”

- Alternatives: “What else did you consider, and why?”

- Success metric: “How do you know [job] is done well?”

- Objections: “What nearly made you say no?”

- Willingness to pay: “At what point would price make this a non‑starter? Why?”

Survey (pricing: Van Westendorp core)

- At what price would you consider the product to be so expensive that you would not consider buying it?

- At what price would you consider the product to be expensive but still worth considering?

- At what price would you consider the product to be a bargain?

- At what price would you consider the product to be so cheap that you’d question its quality?

Messaging preference (pairwise)

- “Which statement best describes the benefit?” Show 2–3 value props; capture the reason for each choice.

Guardrails (to avoid bias)

- Use open questions before lists.

- Don’t anchor with your solution too early.

- Separate “what happened” from “what you think.”

Competitor review mining (e.g., G2, Capterra, forums)

- Extract: liked, disliked, “wish it did,” switching reasons.

- Tag by segment and use case.

- Summarize as jobs, pains, gains.

Extra prompts you can plug in today

- Switching story: “What finally made you leave your previous tool, and what did you hope would be different?”

- Budget reality: “Whose budget does this come from? What do you stop paying for to fund it?”

- Implementation risk: “What would keep this from being fully adopted?”

- Value proof: “If we disappeared tomorrow, how would your day get worse?”

- Friction: “What almost derailed rollout? Be specific.”

Interview flow and tone

- Start with context, then last behavior, then desired outcomes, then objections, then pricing. Keep opinions for the end.

- Use neutral mirroring and “tell me more.” Avoid pitching.

Survey add‑ons (only if needed)

- Top‑2 box intent: “How likely are you to consider [concept]?” (Very/Somewhat likely).

- Feature priority: MaxDiff across 8–12 benefits; limit to one MaxDiff block to keep survey short.

- Segment tags: simple self‑ID (“I am primarily a… [buyer/user/executive]”).
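For a quick read of a MaxDiff block before any formal modeling, a count-based score (times chosen best minus times chosen worst, divided by times shown) is a common first pass. This Python sketch uses that counting approach rather than the model-based estimation (e.g., hierarchical Bayes) many survey platforms offer; the benefit names and task data are made up.

```python
from collections import Counter


def maxdiff_scores(tasks):
    """tasks: (items_shown, best_pick, worst_pick) tuples, one per screen."""
    shown, best, worst = Counter(), Counter(), Counter()
    for items, b, w in tasks:
        shown.update(items)
        best[b] += 1
        worst[w] += 1
    # Score ranges from -1 (always picked worst) to +1 (always picked best).
    return {item: (best[item] - worst[item]) / shown[item] for item in shown}


tasks = [
    (["Audit-ready reports", "Dashboards", "Slack alerts", "SSO"], "Audit-ready reports", "SSO"),
    (["Audit-ready reports", "Dashboards", "Slack alerts", "API access"], "Audit-ready reports", "API access"),
    (["Dashboards", "Slack alerts", "SSO", "API access"], "Dashboards", "SSO"),
]
scores = maxdiff_scores(tasks)
for item, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{score:+.2f}  {item}")
```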

Turning raw data into decisions

Create five lightweight artifacts:

- Codebook: short labels for repeated concepts.

- Theme map: 5–10 themes with counts and exemplar quotes.

- Evidence table: insight → supporting quotes/data → confidence rating.

- Pricing chart: Van Westendorp intersections and recommended tier boundaries.

- One‑page decision memo (below).
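The codebook, theme map, and evidence table all start from the same coded excerpts. A minimal Python sketch of the counting step, assuming each excerpt has already been tagged with one code and one segment; the field names, the first-seen exemplar rule, and the sample excerpts are illustrative.

```python
from collections import Counter, defaultdict


def theme_map(excerpts):
    """Count coded excerpts per theme and segment, keeping one exemplar quote.

    excerpts: dicts with 'source', 'segment', 'code' and 'quote' keys.
    """
    themes = defaultdict(lambda: {"total": 0, "by_segment": Counter(), "exemplar": None})
    for e in excerpts:
        t = themes[e["code"]]
        t["total"] += 1
        t["by_segment"][e["segment"]] += 1
        if t["exemplar"] is None:  # first quote seen becomes the exemplar
            t["exemplar"] = f'"{e["quote"]}" ({e["source"]})'
    # Most-mentioned themes first.
    return dict(sorted(themes.items(), key=lambda kv: kv[1]["total"], reverse=True))


excerpts = [
    {"source": "Interview 3", "segment": "Ops manager", "code": "audit-ready", "quote": "Audits eat a week every quarter."},
    {"source": "G2 review", "segment": "Ops manager", "code": "audit-ready", "quote": "Exporting for auditors is clunky."},
    {"source": "Interview 5", "segment": "Finance", "code": "audit-ready", "quote": "Our auditor wants one PDF."},
    {"source": "Interview 1", "segment": "Finance", "code": "dashboards", "quote": "Dashboards are nice to have."},
]
result = theme_map(excerpts)
for code, t in result.items():
    print(code, t["total"], dict(t["by_segment"]), t["exemplar"])
```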

Decision memo template

- Decision:

- Option considered (A/B/C) and why:

- Evidence summary (top 5 insights, links):

- Risks and unknowns:

- Recommendation and next experiments:

- Owners and timeline:

Confidence ratings rubric (simple and defensible)

- High: multiple sources align (qual + quant + behavior), low variance across segments.

- Medium: sources align but rely on attitudes; pilot recommended.

- Low: sources conflict or sample suspect; pause and redesign.

From insight to experiment

- Each insight must map to one experiment with a success metric, timeframe, and owner. Example: “Switch H1 on landing page to ‘Audit‑ready in minutes’ and target +12% uplift in qualified signups within 14 days.”
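To judge whether an observed lift actually clears the target, compare conversion rates with a significance test. The sketch below uses a pooled two-proportion z-test, one common choice for landing-page A/B tests rather than the only one; the visitor and signup counts are hypothetical.

```python
import math


def relative_uplift(conv_a, n_a, conv_b, n_b):
    """Relative change of variant B's conversion rate over control A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    return (p_b - p_a) / p_a


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed with math.erf.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical test: control headline vs. "Audit-ready in minutes".
uplift = relative_uplift(100, 1000, 112, 1000)
z, p = two_proportion_z_test(100, 1000, 112, 1000)
print(f"uplift {uplift:+.0%}, z = {z:.2f}, p = {p:.2f}")
```

With these made-up counts (10.0% vs. 11.2%, 1,000 visitors per arm) the relative uplift is +12%, but z ≈ 0.87 and p ≈ 0.38, so a test this size cannot separate the lift from noise; run it longer or treat the result as directional.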

Repository hygiene

- Store transcripts, notes, and survey exports with dates, segments, and consent status. Tag insights with version numbers so you can update, not rewrite.

Practical recruiting and incentive tips

- B2B: start with your pipeline and closed‑lost contacts; partner with communities; offer $100+ or charity donations for 30–45 min.

- B2C: use social stories, intercepts, and friends‑of‑friends—but screen strictly; $15–$30 is typical for 10–15 min surveys.

Outreach scripts (steal these)

- Email/DM opener: “We’re making decisions about [X] this month and would value your candid feedback. 30–45 min, $100 thank‑you. Are you open to a brief conversation next week?”

- Customer ask: “As part of improving [product], we’re speaking with 6–8 customers who [did action]. Can we book 30 minutes? We’ll share what we learned.”

Scheduling and show‑rate boosts

- Offer 3 time windows, send calendar holds, and send a reminder 24 hours before that restates the incentive and includes a reschedule link. Expect 10–20% no‑shows; over‑recruit by 1–2.
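The over-recruit rule is the target divided by the expected show rate, rounded up. A minimal Python sketch; `sessions_to_book` is a hypothetical helper, and it takes the no-show rate as a whole-number percentage to keep the arithmetic exact.

```python
import math


def sessions_to_book(target_completes, no_show_pct):
    """Sessions to schedule so expected shows still reach the target."""
    return math.ceil(target_completes * 100 / (100 - no_show_pct))


for pct in (10, 20):
    booked = sessions_to_book(8, pct)
    print(f"{pct}% no-shows: book {booked} ({booked - 8} extra)")
```

For an 8-interview target this books 9 sessions at 10% no-shows and 10 at 20%, matching the 1–2 extra above.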

Field‑tested pricing in days

- Run a Van Westendorp survey (100–250 qualified respondents) to set guardrails.

- Combine with 3–5 buyer interviews to understand price/value tradeoffs.

- Validate with a live smoke test (landing page + checkout/onboarding step) to observe actual behavior before rollout.

Van Westendorp in practice (quick math)

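- Plot cumulative percentages for the four questions. “Too cheap” and “bargain” fall as price rises; “expensive” and “too expensive” rise. Invert the middle two to get “not a bargain” (100% minus “bargain”) and “not expensive” (100% minus “expensive”), then compute intersections:

- Point of Marginal Cheapness (PMC) = intersection of “too cheap” and “not a bargain.”

- Point of Marginal Expensiveness (PME) = intersection of “too expensive” and “not expensive.”

- Optimal Price Point (OPP) = intersection of “too cheap” and “too expensive.”

- Indifference Price Point (IPP) = intersection of “bargain” and “expensive.”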

- Recommend a price inside PMC–PME, near OPP, then test live with a small cohort.
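The four intersections can be computed directly from raw answers. This is a minimal Python sketch, assuming every respondent answered all four questions and that you evaluate the curves on an ascending grid of candidate prices you choose. `PSMResponse`, the helper names, and the toy data are illustrative, and the curve conventions (a price counts as “too cheap” for anyone whose too-cheap answer is at or above it, and as “expensive” for anyone whose expensive answer is at or below it) follow common practice rather than any single tool.

```python
from dataclasses import dataclass


@dataclass
class PSMResponse:
    """One respondent's answers to the four Van Westendorp questions."""
    too_cheap: float
    bargain: float
    expensive: float
    too_expensive: float


def psm_curves(responses, price):
    """Cumulative shares of respondents for one candidate price."""
    n = len(responses)
    too_cheap = sum(r.too_cheap >= price for r in responses) / n
    cheap = sum(r.bargain >= price for r in responses) / n
    expensive = sum(r.expensive <= price for r in responses) / n
    too_expensive = sum(r.too_expensive <= price for r in responses) / n
    return {
        "too_cheap": too_cheap,
        "cheap": cheap,
        "not_cheap": 1 - cheap,  # "not a bargain"
        "expensive": expensive,
        "not_expensive": 1 - expensive,
        "too_expensive": too_expensive,
    }


def first_crossing(prices, table, a, b):
    """First price (ascending grid) where curve a meets or crosses curve b.

    Between two grid points the crossing is linearly interpolated.
    """
    prev_p = prev_d = None
    for p in prices:
        d = table[p][a] - table[p][b]
        if d == 0:
            return p
        if prev_d is not None and (prev_d < 0) != (d < 0):
            return prev_p + (p - prev_p) * prev_d / (prev_d - d)
        prev_p, prev_d = p, d
    return None  # no crossing on this grid


def van_westendorp(responses, prices):
    table = {p: psm_curves(responses, p) for p in prices}
    return {
        "PMC": first_crossing(prices, table, "too_cheap", "not_cheap"),
        "OPP": first_crossing(prices, table, "too_cheap", "too_expensive"),
        "IPP": first_crossing(prices, table, "cheap", "expensive"),
        "PME": first_crossing(prices, table, "too_expensive", "not_expensive"),
    }


responses = [
    PSMResponse(20, 40, 70, 90),
    PSMResponse(30, 50, 80, 100),
    PSMResponse(40, 60, 90, 110),
    PSMResponse(50, 70, 100, 120),
]
points = van_westendorp(responses, list(range(10, 131, 10)))
print(points)  # {'PMC': 50, 'OPP': 60, 'IPP': 70, 'PME': 90}
```

With only four toy respondents the curves are step functions, so the points land exactly on grid prices; with 100–250 real respondents, use a finer grid (for example every $1) so the interpolated crossings are meaningful.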

Alternatives if you need more precision

- Gabor‑Granger: simple willingness‑to‑pay curve across discrete price points.

- Conjoint (only if stakes are high): attribute trade‑offs for packaging; more effort, more nuance.
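Gabor‑Granger results reduce to a demand curve and a revenue index (price × share willing to buy). A minimal Python sketch, assuming you record each respondent's highest accepted price from the price ladder and that everyone accepted at least the lowest price tested; the helper name and the sample answers are hypothetical, and the revenue index ignores costs and the usual gap between stated and actual purchase intent.

```python
def gabor_granger(max_accepted, prices):
    """Demand curve and revenue index from each respondent's highest accepted price."""
    n = len(max_accepted)
    rows = []
    for p in prices:
        demand = sum(m >= p for m in max_accepted) / n  # share who would buy at p
        rows.append((p, demand, p * demand))            # revenue index = price x demand
    best_price = max(rows, key=lambda row: row[2])[0]
    return rows, best_price


max_accepted = [49, 59, 69, 69, 79, 79, 89, 89, 89, 99]
rows, best_price = gabor_granger(max_accepted, [49, 59, 69, 79, 89, 99])
for p, demand, revenue in rows:
    print(f"${p}: {demand:.0%} would buy, revenue index {revenue:.1f}")
print("Revenue-maximizing price:", best_price)  # Revenue-maximizing price: 69
```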

Common pitfalls and how to avoid them

- Vague scope: lock one decision; cut everything else.

- Leading questions: ask about the last real behavior, not hypotheticals.

- Bad samples: screen for recency and decision power.

- Over‑index on opinions: triangulate with revealed behavior (analytics, funnels, live tests).

- “Insights” with no action: force every insight to one experiment, one owner, one date.

More anti‑patterns to watch

- Paralysis by analysis: if two options are statistically tied, pick the simpler one and ship a follow‑up test.

- Vanity samples: respondents who love you aren’t representative; balance with cold audiences.

- Over‑sized surveys: beyond 7 minutes, completion rates fall and data quality drops.

Secondary research you should actually use

- Government/official sources for market and trade context (industry sizes, growth, regulations), then refine with primary research on your audience and offer. See the International Trade Administration’s step‑by‑step framework for using trade data, country reports, and market selection. Source: trade.gov (U.S. Department of Commerce).

Practical use of secondary data

- Start with total addressable market ranges, regulatory constraints, and trend lines. Then zoom into realistic serviceable market by applying your ICP filters.

- Cross‑reference with competitor disclosures (pricing pages, case studies) to spot positioning whitespace you can test in primary research.

Templates

Mini research brief

- Decision by: [Date]

- Hypotheses: H1, H2, H3

- Audience: [Title/Segment/Size/Geo]

- Methods: [Interviews: 8] [Survey: 150 completes] [Review mining]

- Success threshold: e.g., “≥70% of ICPs rank Value Prop A #1”

- Risks: [e.g., low incidence rate]

Interview screener (B2B)

- Role: Must influence or decide purchase of [category]

- Company size: [range]

- Activity: Evaluated or bought [category] in last 6 months (Yes/No)

- Disqualifiers: Students, agencies reselling the category, vendors in category

Evidence table (example row)

- Insight: Ops managers care more about “audit‑ready reports” than “dashboards.”

- Evidence: 7/10 interviews cited audits; review mining: 23 mentions; survey: 62% ranked “compliance” #1.

- Confidence: High

- Action: Move “audit‑ready” to H1, create audit sample report.

Extra templates (drop‑in)

- Recruitment tracker: Name | Segment | Source | Qualified? | Scheduled | Completed | Incentive sent.

- Interview guide skeleton: Intro/consent (2m) → Trigger story (8m) → Alternatives (6m) → Outcomes (6m) → Objections (6m) → Pricing (6m) → Wrap (1m).

- Survey QA checklist: logic works, attention check, device test (mobile), 5–7 minute target, soft‑launch pass.

Where AskPot accelerates this process (and where it doesn’t)

AskPot is built for fast, source‑backed market and competitive research. Use it to:

- Discover competitors automatically and assemble side‑by‑side profiles (positioning, pricing, features).

- Mine public reviews to extract “loved,” “hated,” and “most‑wanted” lists.

- Analyze a company website for ICP, UVP, and messaging patterns.

- Coordinate and synthesize findings into structured reports.

Great fit:

- Days 2–5 of the sprint for competitor discovery, review mining, and website/message analysis.

- Drafting initial insight statements and evidence tables from public sources.

What still needs humans or specialized tools:

- Live customer interviews and nuanced probing.

- Running surveys/panels and pricing tests.

- Final judgment calls when evidence conflicts.

Learn more about AskPot’s capabilities on its homepage (company analysis, reviews analysis, competitors search, user interviews). It’s designed to shorten the “manual busywork” so you spend time on decisions, not data collection.

Tips to get the most from AskPot

- Feed it seed competitors and ICP descriptors to improve discovery.

- Export structured summaries into your evidence table; keep raw links for auditability.

Example 2‑week calendar you can adopt tomorrow

- Mon (wk1): Kickoff; finalize brief and screeners; launch secondary research; publish call for participants.

- Tue: First 3 interviews; begin review mining; draft survey if needed.

- Wed: 3–4 more interviews; pilot survey (n=25).

- Thu: Field survey to target (n=150–200); continue interviews.

- Fri: Close interviews; start coding/themes; compile competitor matrix.

- Mon (wk2): Pricing analysis; build evidence table and theme map.

- Tue: Draft decision memo; define experiments (landing test, pricing trial).

- Wed: Stakeholder review; resolve gaps with 1–2 targeted calls.

- Thu: Final decision; align owners and dates.

- Fri: Share learnings; schedule 30‑day validation.

Owner and meeting cadence (lightweight)

- Daily 15‑minute stand‑up: blockers, today’s interviews, synthesis progress.

- Two gates: Gate 1 after Day 5 (enough qual?) and Gate 2 after Day 10 (pricing/messaging decision ready?).

Turn this playbook into a real analysis in Askpot

Spin up a workspace for your own competitive research. Discover competitors automatically, mine reviews for insights, analyze landing pages, and export copy-ready comparison tables for stakeholders in minutes.